Hi Kirsten,

In my experience, when my colleagues look at delay numbers in automotive, they are ONLY interested in the complete delay, i.e. including the packet delay. For 10BASE-T1S, e.g., the delay/latency is being discussed, but not because the processing in the PHY takes any amount of time. It is because it takes 1.2336ms to receive a 1500-byte packet. I hope it is obvious that it is (almost) irrelevant to look at the processing delay of the PHY in this case. Can we agree on that?

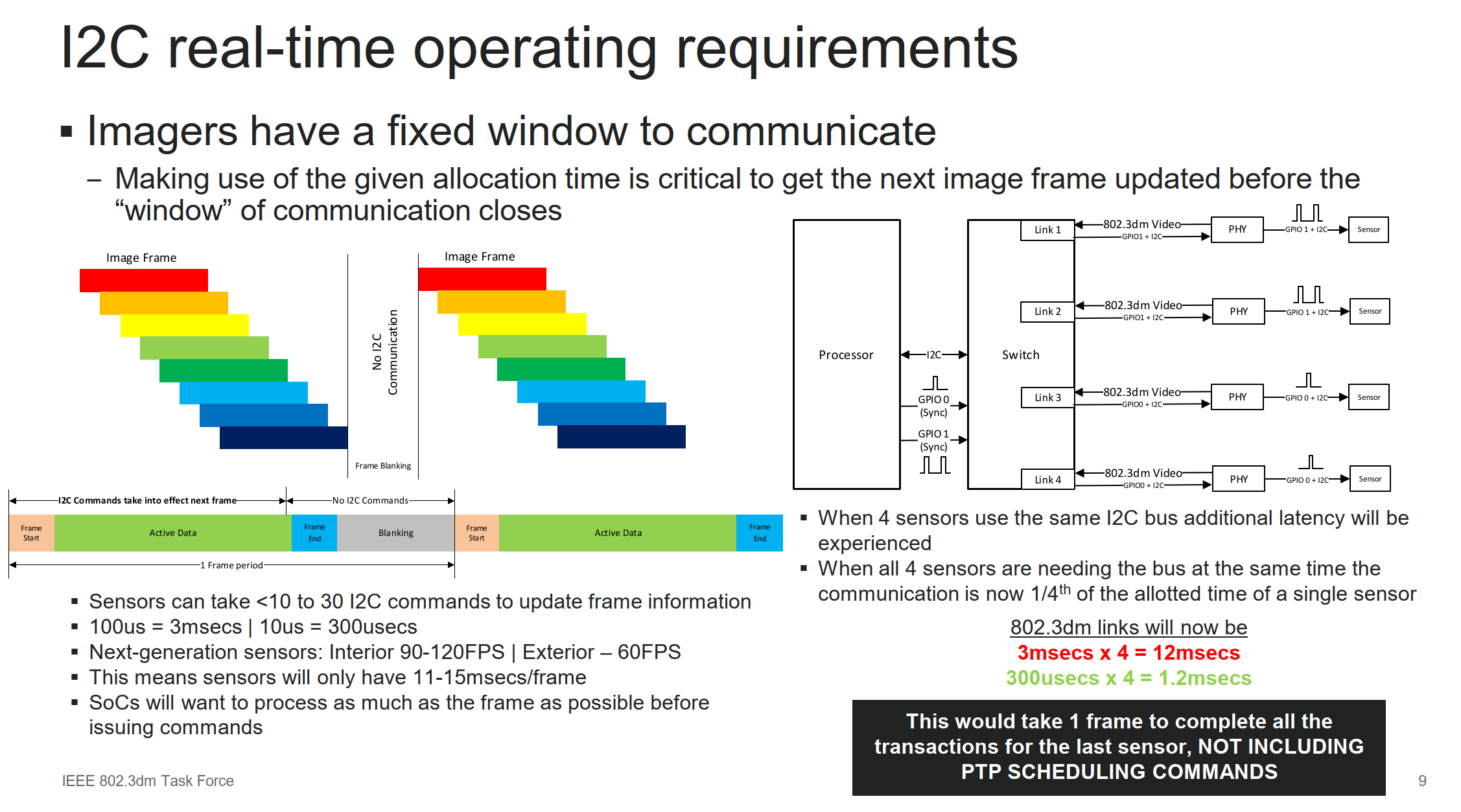

Why are we sending a 1500-byte packet on the control channel once the system is booted? There is no need for this massive packet. I’m afraid I have to disagree with that. Can you explain how this would work for the application I outlined in slide 9? If these latencies are OK, you’re OK with dumping a frame and not making the proper updates on time. The same goes for slide 8. Can a system live with 1sec for the rest of the system? Add the other camera links to the boot process, as I did in the slide. Several customers I have spoken with have already said this is unacceptable for their current and future systems. This is a significant change to their existing systems for a solution that works today.

I am having a hard time following the logic of what this discussion is about. As a customer, I want to know when I can use the content of the packet for further processing. For 100Mbps this is dominated by the 100Mbps interface rate. As a customer, I have no issues having even 100us in the UL for the camera control, again, as the cycle times for cameras/radars/lidars are in a different order of magnitude. I and other colleagues have brought in data that explains the rationale behind this. We have looked in detail at the type of control information and data that gets transferred to the camera. What is missing?

Many others I have spoken with do not agree with this, and some would deem 802.3dm usable in their system only if significant changes are made, for which there is no near-term solution.

You’ve stated that you want to minimize changes in existing systems. What we are being asked to agree on is more than a 10x increase over what is being used today. I’ve listed two applications that need to be addressed with the systems today, and these have not been addressed. Why would a customer create a new interface and change their current infrastructure for something that works today? The latencies I have pointed out are easily achievable, and several people on the committee have pointed this out as well.

Best Regards,

TJ

From: Matheus Kirsten, EE-352 <Kirsten.Matheus@xxxxxx>

Sent: Monday, October 7, 2024 7:35 AM

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: [EXTERNAL] [802.3_ISAAC] AW: [802.3_ISAAC] Real live example of why latency maters

Hi all,

In my experience, when my colleagues look at delay numbers in automotive, they are ONLY interested in the complete delay, i.e. including the packet delay. For 10BASE-T1S, e.g., the delay/latency is being discussed, but not because the processing in the PHY takes any amount of time. It is because it takes 1.2336ms to receive a 1500-byte packet. I hope it is obvious that it is (almost) irrelevant to look at the processing delay of the PHY in this case. Can we agree on that?

The 10-year-old presentation cited was looking at the requirements for 1000BASE-T1 in a backbone use case. To my understanding, the 15us in this presentation also included the packet latency and PHY delay, not just the time it took to process the data in the PHY. The packet delay for 1Gbps is 12.336us. The defined max PHY delay per spec is 7.168us, which together means a delay of about 20us, i.e. more than 15us. I am not aware of a single complaint in the industry that 1000BASE-T1 cannot be used in the backbone/between zones because its delay/latency is too large.
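As a rough cross-check of the packet-delay figures quoted above, here is a minimal sketch. It assumes the 1.2336ms figure corresponds to a 1500-byte payload plus 42 bytes of on-wire overhead (14-byte header, 4-byte VLAN tag, 4-byte FCS, 8-byte preamble/SFD, 12-byte interpacket gap). That breakdown, and the names `OVERHEAD_BYTES` and `serialization_delay_us`, are illustrative assumptions and are not stated in this thread.

```python
# Assumed per-frame on-wire overhead in bytes (an assumption, not from the thread):
# 14 header + 4 VLAN tag + 4 FCS + 8 preamble/SFD + 12 interpacket gap = 42
OVERHEAD_BYTES = 14 + 4 + 4 + 8 + 12

# Maximum 1000BASE-T1 PHY delay as quoted in the message above, in microseconds
PHY_DELAY_1000BASE_T1_US = 7.168


def serialization_delay_us(payload_bytes: int, rate_mbps: float) -> float:
    """Time in microseconds to put one frame on the wire at the given rate."""
    bits = (payload_bytes + OVERHEAD_BYTES) * 8
    # bits divided by Mbit/s gives microseconds
    return bits / rate_mbps


if __name__ == "__main__":
    for rate in (10, 100, 1000):
        delay = serialization_delay_us(1500, rate)
        print(f"{rate:>5} Mbps: {delay:.3f} us")

    total = serialization_delay_us(1500, 1000) + PHY_DELAY_1000BASE_T1_US
    print(f"1000BASE-T1 packet delay + max PHY delay: {total:.3f} us")
```

Under these assumptions the sketch gives 1233.6us at 10Mbps, 123.36us at 100Mbps and 12.336us at 1Gbps, and roughly 19.5us for 1000BASE-T1 once the 7.168us PHY delay limit is added.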

So why do we keep discussing this, and what is so different for the 100Mbps UL for the camera use case? For 100Mbps the delay/latency is 123.36us for the Ethernet packet size that is typically targeted. As I have shown in my presentation: for the high-speed DL the processing delay is in the same range as the packet delay. However, for the low-speed UL the delay is dominated by the link speed. If you add a 1Mbps I2C on top of that, that is what will dominate the delay, not the processing in the PHY (though I agree that startup is an item yet to be discussed separately).

I am having a hard time following the logic of what this discussion is about. As a customer, I want to know when I can use the content of the packet for further processing. For 100Mbps this is dominated by the 100Mbps interface rate. As a customer, I have no issues having even 100us in the UL for the camera control, again, as the cycle times for cameras/radars/lidars are in a different order of magnitude. I and other colleagues have brought in data that explains the rationale behind this. We have looked in detail at the type of control information and data that gets transferred to the camera. What is missing?

Kind regards,

Kirsten

From: William Lo <will@xxxxxxxxxx>

Sent: Friday, October 4, 2024 23:24

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_ISAAC] Real live example of why latency maters

Hi Kamal,

The presentation I referenced recommends the 15us when referring to automotive control loops, and I think it is relevant for both forward and back channels since video data and control data form a loop to control the camera based on the image received. This data is especially relevant to the back channel since the back channel is part of the control loop.

Anyway, my personal opinion is that we focus on what we are chartered to do and build consensus.

I feel a lot of the conversation going back and forth is nice to know but really outside the scope of the group.

I believe I am bringing in data that is in scope of what we need to consider.

I’m bringing up a bunch of datapoints from past work in IEEE for those in the group who may not be aware of past history, to help us make some decisions here. I recall that the info we got in 802.3bp really informed that group what the target needed to be, and if you trace the history of the presentations in 802.3bp, the technical decisions came quickly after the group had a latency target.

I’m hoping for the same here that this data can help us move forward in .3dm.

I respectfully disagree with your delay starting points. All past BASE-T1 PHY delays, fast or slow, are easily under 15us.

So I don’t see why this number cannot be met in .3dm and why we shouldn’t try to do even better.

Thanks,

William

From: Kamal Dalmia <kamal@xxxxxxxxxxxxxx>

Sent: Thursday, October 3, 2024 18:54

To: William Lo <will@xxxxxxxxxx>; STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_ISAAC] Real live example of why latency maters

All,

I think it is premature to nail down 1 particular parameter this early on without having looked at various tradeoffs between PAM levels, echo-cancellation etc. in detail.

That being said, if we do want to start with some numbers, before we start quoting specific numbers, it should be noted that 802.3dm will be an Asymmetrical PHY. Past 802.3 automotive PHYs are symmetrical in nature. Even though several of these past PHYs were justified for Camera Applications during their CFIs, hardly any are shipping in the Camera applications. This tells me that starting with past PHYs as a base would not be a good idea.

For asymmetrical PHYs targeted at camera/sensor/display applications, one should look at FORWARD (video) direction separately from REVERSE (control) direction.

Delivery of video with low latency is as desirable as reasonable latency for control data. This means we need two separate numbers, each optimized for its specific purpose. In the past there was only one number per PHY due to symmetry.

May I suggest the following numbers as starting points –

FORWARD Direction (Video data from Camera to SOC) – Max PHY “delay” of 2 microseconds (very valuable to have video delivered to the SOC as quickly as practical)

REVERSE Direction (Control data from SOC to Camera) – Max PHY “delay” of 30 microseconds (keeping in mind that the long pole in this direction is I2C – as Scott and Steve have rightfully pointed out).

One number (such as 15 microseconds) without specifying the direction would not address the topic in the manner it should be addressed. The delay numbers should be different depending on the direction, just as the data rates adopted in the project’s objective differ depending on the direction.

Please note that I am using the term “delay” as opposed to “latency”. This is because “delay” is the only parameter that is within the scope of 802.3 PHYs. William has already pointed to this parameter in

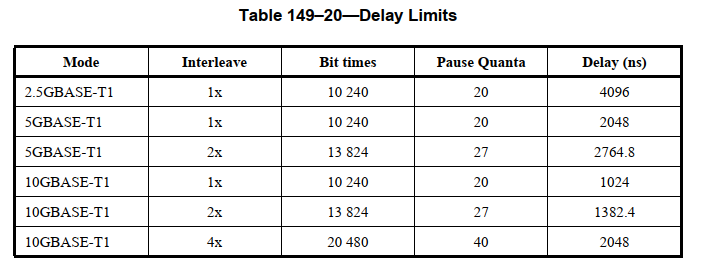

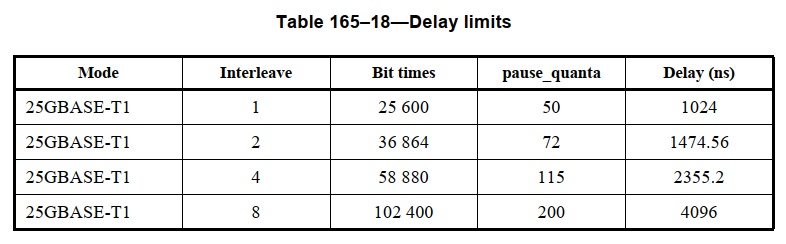

his email below. Tables 149-20 and 165-18 specify “delay limits” and not latency limits. This is the time from when the data arrives at local MII to when it is delivered at the far end MII. “Latency” is a concept above physical layer and out of scope of 802.3

(even though good to look into and understand). Also, please note that the numbers in these tables are “limits”. This implies that this is the max time allowed. The actual time of delivery may be less than the max allowed.

Cheers,

Kamal

To unsubscribe from the STDS-802-3-ISAAC list, click the following link:

https://listserv.ieee.org/cgi-bin/wa?SUBED1=STDS-802-3-ISAAC&A=1
