Peter,

I’m not opposed to using OMA as the principal indicator of transmitter performance. I am opposed to reducing the Pave-min spec of 100GBASE-FR1 below that of 100GBASE-DR, because it would create interop problems and confusion in the market.

Brian

From: Peter Stassar <Peter.Stassar@xxxxxxxxxx>

Sent: Wednesday, April 8, 2020 5:11 AM

To: Brian Welch (bpwelch) <bpwelch@xxxxxxxxx>

Cc: STDS-802-3-100G-OPTX@xxxxxxxxxxxxxxxxx

Subject: RE: [802.3_100G-OPTX] TX Average Power Min.

Hi Brian,

Even though I understand where you are coming from, I don't quite understand where you want to go with this.

I agree that it’s common to measure average power and use the measurement of extinction ratio to derive OMA.

Although I personally have always preferred average power specs over OMA-based specs, IEEE 802.3 agreed about 20 years ago, in the 802.3ae 10 Gb/s Ethernet project, to introduce OMA as the principal indicator of optical power (instead of average power).

Unless you intend to propose a move to average-power-based specifications, we have to live with the agreement to use OMA as the principal indicator of power.

That automatically implies that all values of average power in our specifications are derived from OMA and ER.
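The derivation Peter refers to can be sketched in a few lines. This is a minimal sketch, assuming an ideal signal: for NRZ, OMA is the difference between the one and zero levels and ER is their ratio; for PAM4 the same relation holds for OMAouter and the outer-level ER if the four levels are equally spaced and equiprobable. Measurement effects are ignored, and the function names and the OMA value in the example are hypothetical.

```python
import math


def er_factor_db(er_db: float) -> float:
    """Pave minus OMA (in dB) for an ideal signal with extinction ratio er_db.

    With outer levels P1 > P0: Pave = (P1 + P0) / 2, OMA = P1 - P0 and
    ER = P1 / P0, which gives Pave = OMA * (ER + 1) / (2 * (ER - 1)).
    As ER tends to infinity the ratio tends to 1/2 (about -3.01 dB).
    """
    if er_db <= 0:
        raise ValueError("extinction ratio must be greater than 0 dB")
    if math.isinf(er_db):
        return 10.0 * math.log10(0.5)
    er = 10.0 ** (er_db / 10.0)
    return 10.0 * math.log10((er + 1.0) / (2.0 * (er - 1.0)))


def avg_power_dbm(oma_dbm: float, er_db: float) -> float:
    """Average power (dBm) implied by an OMA (dBm) and an ER (dB)."""
    return oma_dbm + er_factor_db(er_db)


if __name__ == "__main__":
    oma = -0.8  # hypothetical OMA value, for illustration only
    for er in (10.0, 15.0, math.inf):
        print(f"ER {er:>5} dB -> Pave {avg_power_dbm(oma, er):6.2f} dBm")
```

For the 10 dB, 15 dB and infinite-ER cases the average power lands about 2.14 dB, 2.74 dB and 3.01 dB below the OMA, respectively, which is why the ER chosen for each PMD directly sets its Pave-min.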

From that point of view, I fully support the aim of at least using the same extinction ratio across PMDs in our specifications.

Kind regards,

Peter

From: Brian Welch (bpwelch) [mailto:00000e3f3facf699-dmarc-request@xxxxxxxxxxxxxxxxx]

Sent: Wednesday, April 8, 2020 1:50 AM

To: STDS-802-3-100G-OPTX@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_100G-OPTX] TX Average Power Min.

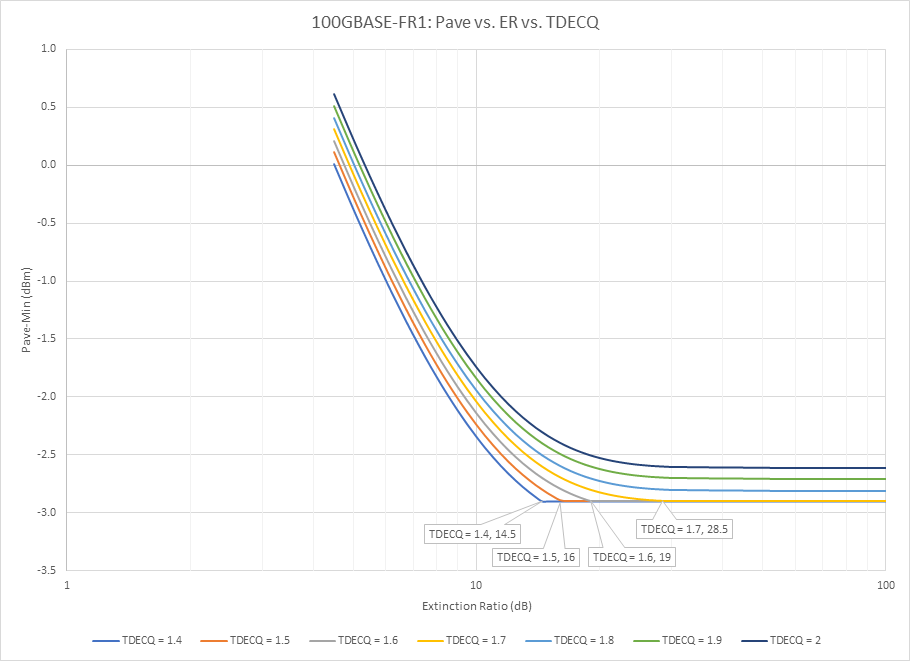

I put together a quick chart, of Pave for different ER and TDECQ conditions:

What it shows is that to take (some) advantage of a reduced Pave-min spec for 100GBASE-FR1 you would need an ER >= 14.5 dB and a TDECQ <= 1.7 dB. To get the full 0.3 dB benefit you’d need an ER >= 28 dB and a TDECQ <= 1.4 dB.

Note that this isn’t the only place that we were conservative with min specs in 100G-DR. The effective min TDECQ (difference between OMA and OMA-TDECQ) was limited to 1.4 dB even though TDECQ values as low as 0.9 dB were demonstrated.
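Curves of the kind in the chart above can be approximated with a short calculation. This is a sketch under stated assumptions, not the calculation behind the chart: a compliant transmitter must meet both OMA-TDECQ (min) and an OMA (min) floor equal to OMA-TDECQ (min) plus the 1.4 dB effective minimum TDECQ, and the current Pave-min is assumed to be computed at that floor with a 15 dB ER. The "headroom" is how far below that Pave-min a compliant transmitter with a given ER and TDECQ could sit; the OMA-TDECQ (min) value cancels out, so none is needed. `pave_headroom_db` is a hypothetical name.

```python
import math


def er_factor_db(er_db: float) -> float:
    """Pave minus OMA (dB) for an ideal signal with extinction ratio er_db."""
    if math.isinf(er_db):
        return 10.0 * math.log10(0.5)
    er = 10.0 ** (er_db / 10.0)
    return 10.0 * math.log10((er + 1.0) / (2.0 * (er - 1.0)))


def pave_headroom_db(tdecq_db: float, er_db: float,
                     spec_er_db: float = 15.0,
                     tdecq_floor_db: float = 1.4) -> float:
    """dB by which this transmitter's lowest compliant Pave falls below a
    Pave-min computed at the OMA floor with spec_er_db.

    Lowest compliant OMA = (OMA-TDECQ)_min + max(TDECQ, floor), so relative
    to the floor the OMA penalty is max(TDECQ, floor) - floor.
    Positive values mean the transmitter could use a lower Pave-min.
    """
    oma_penalty = max(tdecq_db, tdecq_floor_db) - tdecq_floor_db
    return er_factor_db(spec_er_db) - er_factor_db(er_db) - oma_penalty


if __name__ == "__main__":
    tdecq_values = (1.4, 1.6, 1.8)
    print("TDECQ (dB):", tdecq_values)
    for er in (12.0, 15.0, 20.0, 28.0, math.inf):
        row = [f"{pave_headroom_db(t, er):+.2f}" for t in tdecq_values]
        print(f"ER {er:>5} dB:", " ".join(row))
```

Under these assumptions the headroom never exceeds about 0.27 dB (infinite ER, TDECQ at or below 1.4 dB), and it is negative whenever the ER is below the spec ER or the TDECQ is more than about 0.27 dB above the floor. The exact thresholds read off the chart depend on the spec values and rounding used to produce it.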

Thanks,

Brian

From: Eric Maniloff <eric.maniloff.ieee@xxxxxxxxx>

Sent: Tuesday, April 7, 2020 2:24 PM

To: Brian Welch (bpwelch) <bpwelch@xxxxxxxxx>

Cc: STDS-802-3-100G-OPTX@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_100G-OPTX] TX Average Power Min.

Hi all,

I wanted to clarify the reasons for the comment on aligning the Tx average power spec to infinite ER. Most of this was mentioned on the call today, but to reiterate:

Link budgets are based on OMA and TDECQ, not on average power. The average power specs are informative, and designed to allow the widest possible range of transmitter technologies. Clearly the easiest way to do this is to allow any extinction ratio. This intent is clear in the footnote regarding average power (min) specs:

a) Average launch power (min) is informative and not the principal indicator of signal strength. A transmitter with launch power below this value cannot be compliant…

With the transmitter specified at a finite extinction ratio, this statement is not correct, since the normative spec is OMA. In D2.0, Average Power Min (Tx) is specified with a 10 dB ER for 100GBASE-DR, a 15 dB ER for 100GBASE-FR1, and infinite ER for 100GBASE-LR1. Because 100GBASE-DR is specified with only a 10 dB ER, this spec is being used to limit the range of 100GBASE-FR1 values. If a supplier produces a Tx with a higher ER than these values, they are required by the current spec to transmit a higher OMA.
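The extra OMA that a high-ER transmitter must carry can be quantified with the same ideal-signal relation. This is a sketch, assuming the only binding constraints are OMA (min) and a Pave-min derived from OMA (min) at `spec_er_db`; `extra_oma_db` is a hypothetical name.

```python
import math


def er_factor_db(er_db: float) -> float:
    """Pave minus OMA (dB) for an ideal signal with extinction ratio er_db."""
    if math.isinf(er_db):
        return 10.0 * math.log10(0.5)
    er = 10.0 ** (er_db / 10.0)
    return 10.0 * math.log10((er + 1.0) / (2.0 * (er - 1.0)))


def extra_oma_db(er_db: float, spec_er_db: float) -> float:
    """OMA (dB) needed above OMA (min) to also meet
    Pave-min = OMA_min + er_factor_db(spec_er_db).

    A transmitter at OMA_min + x has Pave = OMA_min + x + er_factor_db(er_db),
    so x = max(0, er_factor_db(spec_er_db) - er_factor_db(er_db)).
    """
    return max(0.0, er_factor_db(spec_er_db) - er_factor_db(er_db))


if __name__ == "__main__":
    for spec in (10.0, 15.0):
        for er in (spec, 20.0, math.inf):
            print(f"spec ER {spec} dB, Tx ER {er} dB: "
                  f"extra OMA {extra_oma_db(er, spec):.2f} dB")
```

With the spec ER at 15 dB the penalty tops out at about 0.27 dB; with the spec ER at 10 dB it reaches about 0.87 dB for a very high-ER transmitter. Transmitters with an ER at or below the spec ER pay no penalty, because OMA (min) is then the binding limit.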

The Rx average power min values are set by subtracting the link loss from Tx average power values, again with the footnote:

b) Average receive power (min) is informative and not the principal indicator of signal strength. A received power below this value cannot be compliant

This statement is not true for the Rx. If a device transmits with a higher OMA than the minimum specified for the Tx, then a receiver could receive a signal below the specified minimum average power that nevertheless meets the minimum OMA spec. And to be 100% clear: this signal could have come from a compliant transmitter, complying with both the Tx OMA and Average Power Min specs.

The intent of the footnotes is clear: Any signal with power lower than the Average Power Min will have an OMA less than that required by the link budgets.
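The Rx footnote can be checked numerically. A minimal sketch, assuming an ideal signal, Rx minimums equal to the Tx minimums minus the same maximum channel loss, and placeholder numbers (`TX_OMA_MIN_DBM`, `MAX_LOSS_DB` and `SPEC_ER_DB` are hypothetical, not D2.0 values). A signal arriving exactly at Rx OMA (min) with an ER above the one used to write Pave-min has an average power below Rx Pave-min, which contradicts "cannot be compliant".

```python
import math

# Placeholder values for illustration only; not taken from any draft.
TX_OMA_MIN_DBM = 0.0
MAX_LOSS_DB = 3.0
SPEC_ER_DB = 15.0  # ER used to derive the Pave-min values


def er_factor_db(er_db: float) -> float:
    """Pave minus OMA (dB) for an ideal signal with extinction ratio er_db."""
    if math.isinf(er_db):
        return 10.0 * math.log10(0.5)
    er = 10.0 ** (er_db / 10.0)
    return 10.0 * math.log10((er + 1.0) / (2.0 * (er - 1.0)))


def rx_limits_dbm(tx_oma_min: float, max_loss: float, spec_er: float):
    """Rx OMA (min) and Rx Pave-min, each equal to the Tx value minus max loss."""
    tx_pave_min = tx_oma_min + er_factor_db(spec_er)
    return tx_oma_min - max_loss, tx_pave_min - max_loss


def footnote_shortfall_db(signal_er_db: float,
                          tx_oma_min: float = TX_OMA_MIN_DBM,
                          max_loss: float = MAX_LOSS_DB,
                          spec_er: float = SPEC_ER_DB) -> float:
    """dB by which a signal exactly at Rx OMA (min) with signal_er_db sits
    below Rx Pave-min. Positive means an OMA-compliant signal is below the
    average-power limit that the footnote calls non-compliant."""
    rx_oma_min, rx_pave_min = rx_limits_dbm(tx_oma_min, max_loss, spec_er)
    rx_pave = rx_oma_min + er_factor_db(signal_er_db)
    return rx_pave_min - rx_pave


if __name__ == "__main__":
    for er in (10.0, 15.0, 20.0, math.inf):
        print(f"signal ER {er} dB: shortfall {footnote_shortfall_db(er):+.2f} dB")
```

The shortfall does not depend on the loss value, since the same loss is subtracted from both limits; it is set entirely by the gap between the signal ER and the ER used to write Pave-min. With `SPEC_ER_DB = math.inf` the shortfall is zero or negative for every signal, which is the condition under which footnote b) would hold.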

Having said this, I do understand Brian’s concern. 100GBASE-DR and 400GBASE-DR4 have been specified with a 10 dB ER. This has pushed back on 100GBASE-FR1: if the 100GBASE-FR1 spec is changed, there could be a situation where a 100GBASE-FR1 transmitter is connected to a 100GBASE-DR Rx, and the Rx raises a Low Power alarm based on the received value despite receiving a compliant signal over a compliant link. It’s worth noting, though, that this would only happen if a 100GBASE-FR1 transmitter was produced with an Extinction Ratio > 15 dB. In other words, the interop issue is only a problem if vendors are able to build compliant transmitters that violate the current average power min spec. If we don't think transmitter technologies providing > 15 dB ER can be produced, then there's no interop problem.
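The "> 15 dB ER" condition can be made explicit by solving Pave = OMA·(ER + 1)/(2(ER - 1)) for the ER at which a transmitter at a given OMA, seen through a given loss, reaches a receiver's low average power alarm threshold. Rearranging gives ER = (2r + 1)/(2r - 1), where r is the required Pave/OMA ratio in linear units. A sketch, assuming an ideal signal and a fixed average-power alarm threshold at the receiver; all numeric inputs are placeholders and `alarm_er_threshold_db` is a hypothetical name.

```python
import math


def alarm_er_threshold_db(tx_oma_dbm: float, loss_db: float,
                          rx_alarm_dbm: float) -> float:
    """ER (dB) above which a Tx at tx_oma_dbm, seen through loss_db,
    falls below rx_alarm_dbm average power at the receiver.

    Returns math.inf if no ER can trigger the alarm, because Pave never
    drops below OMA / 2 for an ideal signal.
    """
    # Pave/OMA ratio (linear) at which the received power equals the alarm level.
    r = 10.0 ** ((rx_alarm_dbm + loss_db - tx_oma_dbm) / 10.0)
    if r <= 0.5:
        return math.inf  # Pave > OMA/2 >= threshold for every finite ER
    er = (2.0 * r + 1.0) / (2.0 * r - 1.0)
    return 10.0 * math.log10(er)


if __name__ == "__main__":
    # Placeholders: Tx at its OMA (min) of 0 dBm over a 3 dB channel, with the
    # Rx alarm placed where a 15 dB-ER signal at that OMA would land.
    tx_oma, loss = 0.0, 3.0
    er15 = 10.0 ** 1.5
    rx_alarm = tx_oma - loss + 10.0 * math.log10((er15 + 1) / (2 * (er15 - 1)))
    print(f"alarm for ER > {alarm_er_threshold_db(tx_oma, loss, rx_alarm):.2f} dB")
```

With these placeholders the script reports a threshold of 15.00 dB: a transmitter at the OMA floor only trips the alarm once its ER exceeds the ER that was used to place the threshold, and a threshold more than about 3.01 dB below the received OMA can never be tripped.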

So, where does this leave us? We could leave the 100GBASE-FR1 spec alone, which would have the impact of requiring excess margin in high-ER transmitters. We're only discussing a delta of about 0.3 dB, so doing this would not be terribly onerous. If we do this, we should modify the footnotes, since they aren’t currently correct. Alternatively, we could modify the 100GBASE-FR1 specs to calculate minimum average power with infinite ER. If we do modify the specs, I do think an effort should be made to modify the DR specs as well. This would not impact any existing DR transmitters, but would suggest that DR receivers change their low average power alarm threshold.

My preference is to have all of the specs written at infinite ER, but alternatively we could simply modify the notes in the table to be consistent with the current specs.

Eric

On Tue, Apr 7, 2020 at 12:47 PM Brian Welch (bpwelch) <00000e3f3facf699-dmarc-request@xxxxxxxxxxxxxxxxx> wrote:

Picking up this thread to discuss the TX average power min topic.

While it is true that TX average power is not the principal indicator of transmitter performance, it is the most common indicator of transmitter performance, including in the following ways:

- Since it is a DC measurement, it is typically used on all parts as part of a production test flow, whereas OMA/ER (which require a high-speed scope to test) are often measured only in DVT and qual, and then on a sampling basis as part of an ORT/ORM process.

- TX average power is also typically what is reported in the memory map (again, because it is easy to measure on chip), and as such is used in network telemetry systems to monitor transmitter performance and aging.

- It is also easy to test in the field, so link debug will generally start with an average optical power measurement and only progress to an OMA/ER measurement if necessary (which generally requires removing the part from the field and returning it to the manufacturer).
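To make the telemetry point concrete: a module's DC monitor reports only average power, so relating a field reading back to OMA requires an assumed ER. A sketch, assuming the common memory-map convention of a 16-bit unsigned Tx power monitor word with an LSB of 0.1 µW (as in SFF-8472/SFF-8636; check the memory map actually in use), an ideal signal, and a hypothetical alarm threshold; the function names are hypothetical.

```python
import math


def monitor_to_dbm(raw: int) -> float:
    """Convert a 16-bit Tx power monitor word (assumed LSB = 0.1 uW) to dBm."""
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("monitor word must be a 16-bit unsigned value")
    mw = raw * 1e-4  # 0.1 uW = 1e-4 mW
    return -math.inf if mw == 0 else 10.0 * math.log10(mw)


def implied_oma_dbm(pave_dbm: float, assumed_er_db: float) -> float:
    """OMA implied by an average-power reading for an assumed ER (ideal signal)."""
    er = 10.0 ** (assumed_er_db / 10.0)
    return pave_dbm - 10.0 * math.log10((er + 1.0) / (2.0 * (er - 1.0)))


def low_power_alarm(raw: int, threshold_dbm: float) -> bool:
    """True if the reported average power is below the (hypothetical) threshold."""
    return monitor_to_dbm(raw) < threshold_dbm


if __name__ == "__main__":
    reading = 5000  # 0.5 mW, about -3.01 dBm
    pave = monitor_to_dbm(reading)
    print(f"Pave {pave:.2f} dBm, alarm: {low_power_alarm(reading, -2.9)}")
    for er in (10.0, 15.0):
        print(f"assumed ER {er} dB -> OMA {implied_oma_dbm(pave, er):.2f} dBm")
```

The same reading maps to different OMA values depending on the ER assumed, so an alarm threshold written in average power implicitly encodes an ER assumption.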

All of this is to underscore that this is probably one of the most noticed parameters in a spec, and modifying the spec in such a way that you create inconsistencies between 100GBASE-DR and 100GBASE-FR1 would quickly be noticed in the field. I recommend keeping the 100GBASE-FR1 TX average power min spec equivalent to that in 100GBASE-DR.

Thanks,

Brian

From: David Lewis <David.Lewis@xxxxxxxxxxxx>

Sent: Monday, April 6, 2020 4:02 PM

To: STDS-802-3-100G-OPTX@xxxxxxxxxxxxxxxxx

Subject: [802.3_100G-OPTX] 802.3cu - Results of offline comment resolution discussions

All,

In order to increase the speed of comment resolution in our teleconference calls, a group of us met off-line to work through some of the comments that were unresolved during our last call. We came to the following proposed resolutions:

| Comment # | Commenter | Topic | Proposed resolution | Note |
|---|---|---|---|---|
| 54 | Eric Maniloff | Tx Avg Power | Accept | |
| 103 | Mike Dudek | Interop | Accept in principle | Max loss of DR channel is 3.0 dB, not 2.6. So proposed value for max loss should increase by 0.4 dB to 5.2 dB. |
| 104 | Mike Dudek | Interop | Accept in principle | Max loss of DR channel is 3.0 dB, not 2.6. So proposed value for max loss should increase by 0.4 dB to 4.5 dB. |
| 105 | Mike Dudek | Interop | Accept | |
| 106 | Mike Dudek | Interop | Accept | |
| 115 | Mike Dudek | Interop | Accept | |
| 116 | Mike Dudek | Interop | Accept | |

During tomorrow’s call, we will ask if there are any objections to these resolutions, and if there are none, we will move on to other topics.

Thanks,

David Lewis

To unsubscribe from the STDS-802-3-100G-OPTX list, click the following link: https://listserv.ieee.org/cgi-bin/wa?SUBED1=STDS-802-3-100G-OPTX&A=1
