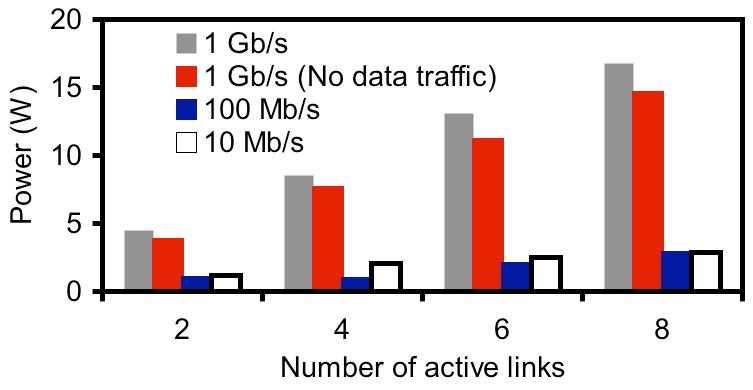

So changing the 10BASE-T spec doesn't sound quite as easy as was mentioned earlier. Further, when we look at the measurements for potential savings (granted, they are not precise scientific measurements, but they are first order):

It just doesn't look like there's much to be saved when compared to 100BASE-TX. Does anyone have data to suggest otherwise? Remember, these measurements are "at the wall".

Thanks,

Mike

Joseph Chou wrote:

Hi Pat,

Here are my two pennies. I am guessing that the reason to lower the output voltage of 10BASE-T is to reduce its power consumption, so that a higher-speed PHY can be downgraded to 10BASE-T. However, even if we modify the standard to allow a lower output voltage for 10BASE-T, we will probably end up with a 10BASE-T PHY whose power consumption is comparable to that of 100BASE-T, which would negate the advantage of changing speed. The benefit of changing the spec could turn out to be a new lower-power 10BASE-T for the case where it drives the longest Cat 3 cable, so that only 10 Mb/s can be negotiated successfully.

By the same token, so far no one has considered adding a Power Back Off mode to 1000BASE-T and 100BASE-T for shorter cable lengths, because the power saving may be very marginal. I am afraid that the incentive for changing the 10BASE-T spec is not as great as that for devising an electrical idle mode to which all PHY modes (10, 100, 1000, 10G) can be switched.

By the way, to meet the template of Figure 14-9, the transmitter normally needs to pre-emphasize the waveform for the fat bit (20 ns or 2.5 MHz carrier). I don't have simulation results at hand, so I am not sure whether the same amount of pre-emphasis (preset in the IC design) used for the Cat 3 model test would still fit the template when we use the Cat 5 cable model. We may need to consider the attenuation difference between 2.5 MHz and 5 MHz for both cable models.

Best Regards,
-Joseph Chou

-----Original Message-----
From: Pat Thaler [mailto:pthaler@BROADCOM.COM]
Sent: Wednesday, March 28, 2007 6:37 PM
To: STDS-802-3-EEE@listserv.ieee.org
Subject: Re: [802.3EEESG] 10BASE-T question

Mike,

I think that some adjustment to the 10BASE-T transmit voltage would be entirely appropriate. The 10BASE-T output voltage spec (IEEE 802.3-2005 14.3.1.2.1) currently requires that the driver produce a peak differential voltage of 2.2 to 2.8 V into a 100 Ohm resistive load. That was a very normal output voltage when the standard was written in the late 1980s, but it is pretty high nearly 20 years later.
This voltage allowed 10BASE-T to coexist with analog phone ringers in bundled Cat 3 cable; in that situation, the transient when an analog phone ringer goes off-line could exceed 250 mV. That high output voltage is not necessary over Cat 5 or better cable.

The simple change would be to add a differential output voltage spec for operation over Cat 5 or better cable. In that case, remove the minimum voltage spec for peak differential voltage into a 100 Ohm resistive load. One would still keep the maximum voltage spec of 2.8 V, or perhaps substitute a lower maximum. Change the requirement for the Figure 14-9 output voltage template so that it applies to the signal produced at the end of a worst-case Cat 5 cable instead of at the end of the (Cat 3) twisted-pair model.

This should be fully backward compatible with existing 10BASE-T compliant PHYs over Cat 5 cable: the newly specified transmitters will produce a signal over Cat 5 cable that is within the range of signal that the original 10BASE-T produces over the Cat 3 cable channel it specified. That template provides a minimum eye opening of 550 mV. If I plugged the numbers into my calculator correctly, the attenuation difference between Cat 5 and Cat 3 cable at 10 MHz is more than 4 dB, so this should allow the transmit voltage to drop by that amount.

It should be very little work to make this change. A more aggressive change, which would require real work, would be to determine what receive voltage today's receivers could tolerate; they can probably tolerate a smaller eye opening, especially if they are 1000BASE-T receivers operating in a slowed-down mode. In that case, however, one would need either to use the smaller eye opening only when stepped down by EEE, or to add negotiation for low-voltage 10BASE-T to auto-negotiation, because the change would not ensure backward compatibility with classic 10BASE-T receivers.

I think the fully backward-compatible change would be pretty easy to justify.
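As a rough, first-order illustration of the 4 dB figure above (not a measurement, and not part of any proposal in this thread), the Python sketch below converts a decibel reduction into voltage and power ratios and applies it to the 2.2 to 2.8 V output range. The function names are hypothetical, and the load-power estimate assumes the Manchester waveform can be approximated as a square wave into the 100 Ohm test load, so it ignores the template shape, pre-emphasis and driver overhead.

```python
"""First-order arithmetic for the 10BASE-T transmit-voltage discussion.

Assumptions (not from the standard): the Manchester signal is treated as a
square wave, so V_rms == V_peak, and only power delivered to the load is
estimated (driver bias and other PHY overhead are ignored).
"""


def db_to_voltage_ratio(db: float) -> float:
    """Amplitude ratio for a reduction of `db` decibels (20*log10 convention)."""
    return 10 ** (-db / 20)


def db_to_power_ratio(db: float) -> float:
    """Power ratio for a reduction of `db` decibels (10*log10 convention)."""
    return 10 ** (-db / 10)


def load_power_mw(v_peak: float, r_load_ohms: float = 100.0) -> float:
    """Power in mW into a resistive load under the square-wave assumption."""
    return v_peak ** 2 / r_load_ohms * 1000.0


# Figures quoted in the thread: 2.2 V to 2.8 V peak differential into 100 Ohm
# (IEEE 802.3-2005 14.3.1.2.1) and "more than 4 dB" less attenuation for Cat 5
# than for Cat 3 at 10 MHz. 4 dB is used here as a conservative lower bound.
SPEC_MIN_V, SPEC_MAX_V = 2.2, 2.8
CABLE_MARGIN_DB = 4.0


def main() -> None:
    scale = db_to_voltage_ratio(CABLE_MARGIN_DB)
    for v in (SPEC_MIN_V, SPEC_MAX_V):
        reduced = v * scale
        print(f"{v:.2f} V -> {reduced:.2f} V; "
              f"load power {load_power_mw(v):.1f} mW -> {load_power_mw(reduced):.1f} mW")
    print(f"signal power ratio: {db_to_power_ratio(CABLE_MARGIN_DB):.1%}")


if __name__ == "__main__":
    main()
```

Run as a script, it reports a scaled output range of roughly 1.39 to 1.77 V and a delivered signal power of about 40% of the original. Note that this estimates only the power delivered to the load; it says nothing about total PHY power measured at the wall, which is what the measurements at the top of this thread address.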
To summarize: for operation over the channels specified by 100BASE-TX, 1000BASE-T and 10GBASE-T, delete the spec for minimum voltage into a 100 Ohm load and change the test condition for the Figure 14-9 voltage template to be over a worst-case 100BASE-TX channel.

Regards,
Pat

At 01:46 PM 3/28/2007, Mike Bennett wrote:

Folks,

For those of you who were able to attend the March meeting, you may recall we had a discussion on 10BASE-T (in the context of having a low energy state mode) and what we might change to specify this, which included possibly changing the output voltage. Concern was raised that the work required to specify a new output voltage for 10BASE-T would far outweigh the benefit. Additionally, there was a question regarding the use of 100BASE-TX instead of doing anything with 10BASE-T.

Would someone please explain just how much work it would be to change 10BASE-T, and what the benefit would be compared to using 10BASE-T with the originally specified voltage or 100BASE-TX for a low energy (aka "0BASE-T" or "sleep") state?

Thanks,
Mike