Hi Kirsten,

Thanks again for following up.

This brings me to my point about a real-time operating system. Ethernet has an abysmal track record because of the lack of requirements identified at the beginning to prioritize latency.

CAN is widely used in braking and engine control applications because it has a predictable maximum latency. It also provides deterministic behavior with priority-based arbitration schemes, ensuring high-priority messages are sent with minimal delay, which is critical for time-sensitive applications, as I mentioned. Ethernet, in contrast, needs to develop better methods to handle priority-based arbitration. While TSN functionality can be beneficial, it falls short when immediate responses are required, failing to provide the latencies customers require.

I have had customers refuse to adopt Ethernet for engine, braking, and airbag applications purely because of the missed latency and determinism requirements I mentioned. They would very much like to connect these to a network. However, they cannot eliminate CAN because they lack the ability to identify the critical need for low latency in other applications, which is a crucial aspect of ensuring the safety and reliability of these systems.

I dove into the example above to stress the importance customers place on latency in real-time safety-critical applications, which is what 802.3dm plans to address. That is why I am bringing this lack of a requirement to everyone's attention.

I outlined a scenario in the last email. Would you like me to provide more detail?

Let's take one scenario into account to emphasize my point – I am sure many people could attest to it during their commute to work – traffic is heavy. Aggressive drivers try to get to places on time when they're behind schedule. The system must respond to these changes in the environment as we do. Now add construction, meaning rapid changes to lanes, merging, and surroundings. What is the plan for scheduling tasks ahead of time for scenarios that changed rapidly and were unaccounted for?

My question was not answered in the last email.

Can you please explain why we are not trying to match or get close to the latencies used by the SERDES in production, which are critical for real-time operating systems? Why would it not be in the interest of the committee to push the latency to the lowest achievable number? As I outlined above, these are just a few of the scenarios for a system that is making critical decisions that determine the outcome of an accident or worse, and why customers view them as critical for their systems.

Best Regards,

TJ

From: Matheus Kirsten, EE-352 <Kirsten.Matheus@xxxxxx>

Sent: Tuesday, August 13, 2024 4:20 AM

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: [EXTERNAL] [802.3_ISAAC] AW: Need for more Use-Case ad hoc meetings

Hello TJ,

thank you for your extensive response. There is no reason to do things the same way as you do them with (an FDD-based) SerDes (a TDD-based SerDes already organizes some aspects differently), as Ethernet offers different tools. That is like saying, why don't you use Ethernet like CAN? Yes, you might, but you are wasting opportunities and making things unnecessarily complicated.

Could you please provide a scenario in which the environment changes so fast that you need all those commands (or at least many) at once? Again, we are not talking about the brake loop. We are talking about the control loop of the camera, which will still provide images even when the imager settings are very slightly suboptimal for a very short time.

As said, I will structure this in more detail in a presentation for September. That might be easier than text.

Kind regards,

Kirsten

From: TJ Houck <thouck@xxxxxxxxxxx>

Sent: Monday, August 12, 2024 22:50

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_ISAAC] Need for more Use-Case ad hoc meetings

Hi Kirsten,

The scenario you listed is simple and does not deal with the dynamic and rapidly changing environment that occurs in everyday road situations.

Hence the reason customers have strict latency requirements for these rapid changes that can take place.

Imagers do not update with just one command, as you mentioned. It's also important to be aware that many other updates could take place beyond those you listed. Being prepared for these potential changes is key.

- HDR functions

- Exposure

- Gain

- Focus adjustment/control

- White balancing

- Gamma correction

- Lens/Dark/Pixel shading compensation

- Noise reduction

- Color correction

- image resolution, digital cropping, pixel binning changes

- frame rate changes – rare but happens

- ROI (Region of Interest) changes

- status – Error status, GPIO – update triggers for interrupts/changes in environment

These updates can take tens to hundreds of commands, meaning that a 100us latency for a single command (if it is even that) is amplified 10x to 100x beyond the suggested requirement. So now multiply that 0.03/0.3/3mm by 100s. The customer's algorithms typically control these settings to help decide the best ML/NNU views. This adds processing on top of all these delays, which means additional distance travelled, and is why I have been adamant about the critical role latencies play. Once the data is received, the system does not instantaneously decide what to do. The processor has to process this data. By the time it realizes what needs to be adjusted, in some cases 1-100 milliseconds have passed, and now you're burdening the system with an additional request because it either did not get the appropriate updated commands through in time or did not read what was wrong in time. This means the processor will go through this cycle all over again, which could have been avoided if latencies had been tightly controlled.

On top of that, a sensor does not get its own private I2C channel. Sensors share this bus. A system cannot have 12 I2C ports, one dedicated to each device. You can see that quad deserializers available on the public market usually have only 2 I2C ports, and some customers will share 1 of these I2C buses with all 4 sensors and possibly other devices such as PMICs, uC/uP, etc. The processors have a limited number of I2C ports available. So now multiply those 100s of commands by 2 or 4, or higher if there is other traffic on that bus – this could be well over x1000 to get the necessary commands across in time.
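To make the amplification argument above concrete, here is a minimal Python sketch. It assumes the commands are sent strictly one after another, that each command pays the full per-command link latency, and that bus sharing can be modelled as a simple multiplier. These are simplifying assumptions for illustration, and the function names are hypothetical rather than taken from any presentation.

```python
MPH_TO_M_PER_S = 1609.344 / 3600.0  # exact miles-per-hour to metres-per-second factor


def total_command_latency_us(per_command_us, num_commands, bus_sharing_factor=1):
    """Accumulated latency in microseconds for serialized commands.

    Assumption: every command pays the full per-command latency, and other
    devices on the shared bus multiply the wait by bus_sharing_factor.
    """
    return per_command_us * num_commands * bus_sharing_factor


def distance_travelled_mm(speed_mph, latency_us):
    """Distance in millimetres covered at speed_mph during latency_us."""
    return speed_mph * MPH_TO_M_PER_S * latency_us * 1e-6 * 1000.0


if __name__ == "__main__":
    # 100 us per command, as in the text; 10 or 100 commands; 1, 2 or 4 sharers.
    for num_commands in (10, 100):
        for sharing in (1, 2, 4):
            latency = total_command_latency_us(100.0, num_commands, sharing)
            print(f"{num_commands:4d} commands, sharing x{sharing}: "
                  f"{latency / 1000.0:5.1f} ms, "
                  f"{distance_travelled_mm(70.0, latency):7.1f} mm at 70 mph")
```

Under these assumptions, 100 commands at 100us each on a bus shared four ways add up to 40 ms, which is longer than one frame period at 30 FPS.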

Regarding the GPIO – as mentioned above, the 350m vehicle distance is not the whole gamut of scenarios in the real world. Let's take one scenario into account to emphasize my point – I am sure many people could attest to it during their commute to work – traffic is heavy. Aggressive drivers try to get to places on time when they're behind schedule. The system must respond to these changes in the environment as we do. Now add construction, meaning rapid changes to lanes, merging, and surroundings. What is the plan for scheduling tasks ahead of time for scenarios that changed rapidly and were unaccounted for?

Can you please explain why we are not trying to match or get close to the latencies used by the SERDES in production, which are critical for real-time operating systems? Why would it not be in the interest of the committee to push the latency to the lowest achievable number? As I outlined above, these are just a few of the scenarios for a system that is making critical decisions that determine the outcome of an accident or worse, and why customers view them as critical for their systems.

Best Regards,

TJ

From: Matheus Kirsten, EE-352 <Kirsten.Matheus@xxxxxx>

Sent: Monday, August 12, 2024 8:25 AM

To: TJ Houck <thouck@xxxxxxxxxxx>; STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: [EXTERNAL] AW: Need for more Use-Case ad hoc meetings

Hello TJ,

thank you for bringing up the example with the imager and pointing out the relevance of driving speed.

First, I would suggest making a clear distinction between the camera control loop and the brake control loop.

The brake control loop takes (hopefully among other sensors) camera data, processes it in an SoC and sends a brake command to the brakes. There is no loop back to the camera, so the delay on the low-speed return channel makes no difference. Only the high-speed direction counts. Whether, in the high-speed direction, the camera data needs 1us overall to traverse from camera to SoC, or 10us, or even 100us, makes at 110 km/h ~= 70mph a difference of ~0.27mm/2.97mm in how far the car will have moved forward (if I have calculated that correctly). At the same time, the camera-based driver assist algorithms inside the SoC are trained to look up to 350m ahead. I would like to understand better why 3mm, or 0.3mm versus 0.03mm, matter in the high-speed direction. I intend to detail this further in my presentation at the September interim.

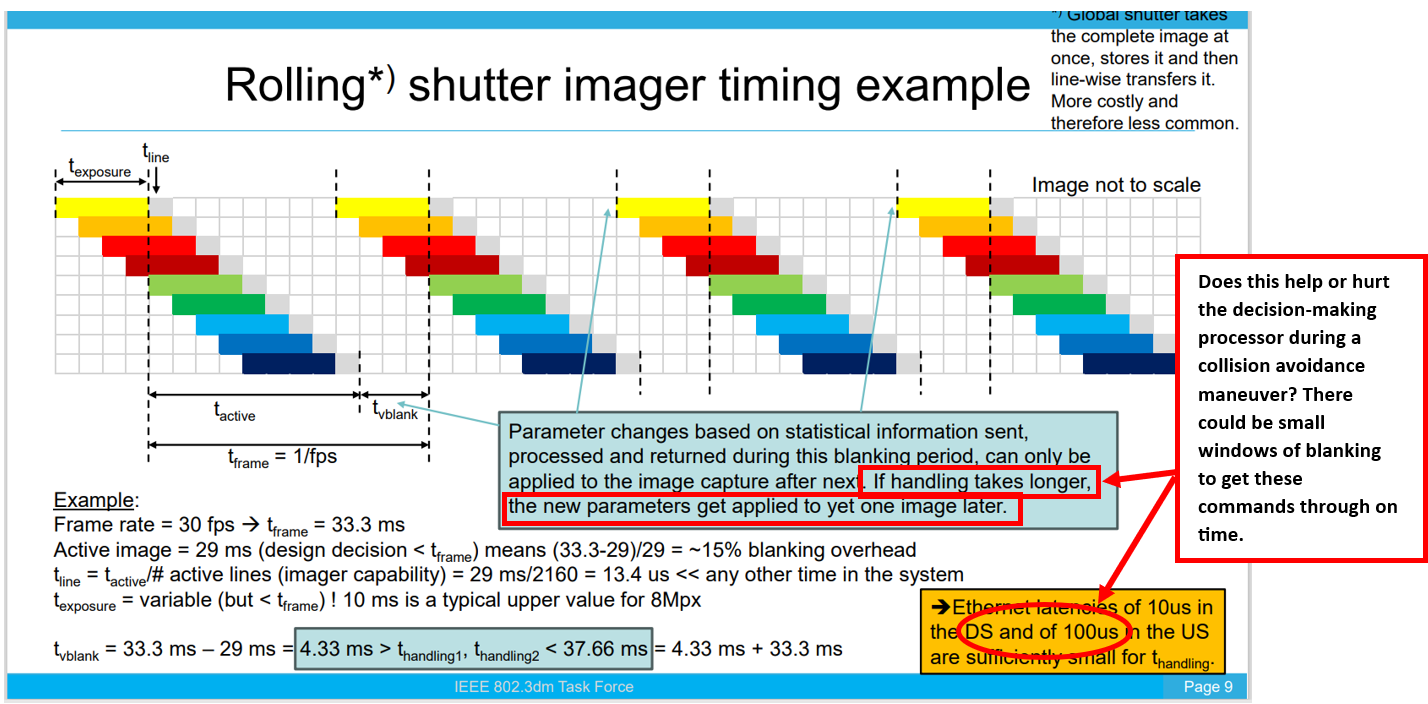
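The distance figures above can be checked with a short Python sketch. It is only an illustration: the helper name is hypothetical, and the 30 m/s value is an assumed rounding of 110 km/h that happens to reproduce the quoted ~0.27mm/2.97mm. With 110 km/h taken exactly (about 30.56 m/s), the differences come out at about 0.275mm and 3.025mm.

```python
def distance_mm(speed_m_per_s, latency_us):
    """Distance in millimetres covered at speed_m_per_s during latency_us."""
    return speed_m_per_s * latency_us * 1e-6 * 1000.0


if __name__ == "__main__":
    exact = 110.0 * 1000.0 / 3600.0  # 110 km/h in m/s, about 30.56
    rounded = 30.0                   # assumed rounding that matches the quoted figures
    for label, speed in (("110 km/h", exact), ("30 m/s", rounded)):
        d1, d10, d100 = (distance_mm(speed, t) for t in (1.0, 10.0, 100.0))
        print(f"{label}: 10us vs 1us = {d10 - d1:.3f} mm, "
              f"100us vs 1us = {d100 - d1:.3f} mm")
```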

The camera control loop takes statistics data from the imager, processes it in an SoC and sends control information back to the imager, which then applies the new parameters. Next to synchronizing various cameras (which in an Ethernet system can be done with the help of the 1AS synched clocks/timestamps; using GPIO triggers for this is unnecessary), examples of items you would like to control inside the imager are

- Exposure time

- Gain control

- Automatic white balance

- Reading of status data like temperature and voltage

The three control items have to do with reacting to a change of light. What is the scenario in which the light changes so much within ~2m of driving (the example of 67ms travelled at ~70mph/110km/h) that the imager data is no longer useful? The uplink transmission time (10us versus ~100us) would in any case only make a difference if the total control loop for exposure time exceeded the total budget of the blanking period of 4.3ms by 100us-10us = 90us. With camera data used to look ahead 350m, that means 11s of travelling time at 70mph to look ahead and prepare.
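As a rough sketch of the budget argument above, the Python below computes the look-ahead time and expresses the condition under which the uplink latency would change the outcome. The 4.3ms budget and the 10us and 100us uplink values are from the text. The function names, and the idea of splitting the loop into "everything except the uplink" plus the uplink, are assumptions made for illustration.

```python
MPH_TO_M_PER_S = 1609.344 / 3600.0  # exact miles-per-hour to metres-per-second factor


def travel_time_s(distance_m, speed_mph):
    """Seconds needed to cover distance_m at speed_mph."""
    return distance_m / (speed_mph * MPH_TO_M_PER_S)


def distance_m(speed_mph, seconds):
    """Metres covered at speed_mph in the given number of seconds."""
    return speed_mph * MPH_TO_M_PER_S * seconds


def uplink_latency_matters(loop_without_uplink_us, budget_us=4300.0,
                           fast_us=10.0, slow_us=100.0):
    """True only if the loop fits the budget with the fast uplink
    but misses it with the slow one (a window of slow_us - fast_us)."""
    fits_fast = loop_without_uplink_us + fast_us <= budget_us
    fits_slow = loop_without_uplink_us + slow_us <= budget_us
    return fits_fast and not fits_slow


if __name__ == "__main__":
    print(f"350 m at 70 mph: {travel_time_s(350.0, 70.0):.1f} s")
    print(f"67 ms at 70 mph: {distance_m(70.0, 0.067):.2f} m")
    for loop_us in (4000.0, 4250.0, 4295.0):
        print(loop_us, uplink_latency_matters(loop_us))
```

Under these assumptions the uplink latency is decisive only when the rest of the loop lands in a 90us window just below the 4.3ms budget.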

As said, more to come in September.

Kind regards,

Kirsten

From: TJ Houck <thouck@xxxxxxxxxxx>

Sent: Sunday, August 11, 2024 17:24

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_ISAAC] Need for more Use-Case ad hoc meetings

Hi Scott,

Thanks for following up. If I don't have the patience to respond to this, I am very worried about my future handling my patience with my children, who test me x1000 more on the patience side at times😊.

Your questions are valuable, and I encourage you to continue sharing your thoughts. Please don't feel the need to apologize. I am new, and this is my 1st reflector exchange, but in general, I would be surprised if these forums were not meant to prompt questioning and understanding, and that is not how I took your previous response.

The main objective of the presentation I gave on latency was to provide a high-level overview and stimulate your thinking about these parameters. I intentionally didn't delve deeper due to time constraints, but I wanted to spark your interest in the importance of these latencies and their potential use cases. These systems rely on these latencies for critical decisions such as collision avoidance, object detection, and emergency braking at high speeds.

I will leave you with one crucial aspect to consider, and I hope this addresses some of the questions you raised.

This means you have now lost 33.33 ms for a 30 FPS system, and you need an additional 33.3 ms for the next frame. This now totals 66.7 ms, meaning a vehicle moving at 70mph (a standard interstate speed limit in many U.S. locations) travels 6.8 feet, versus 3.42 feet if those other commands had reached the sensor on time. I understand the different aspects of this decision, but after a severe accident the question would be directed to me as a system engineer: did you give the vehicle the best opportunity to respond accordingly over the communication link, or was the system burdened with unnecessary latencies that did not allow the processor to get the necessary information in time? Even worse would be a simulation showing that this latency difference was the determining factor in the accident's severity.
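The frame arithmetic above can be reproduced with a minimal Python sketch. The 30 FPS and 70mph values come from the text. The function name is hypothetical, and the model simply assumes the vehicle holds a constant speed for the lost frame periods.

```python
FEET_PER_MILE = 5280.0


def feet_travelled(speed_mph, frames, fps=30.0):
    """Feet covered at constant speed_mph during the given number of frame periods."""
    feet_per_second = speed_mph * FEET_PER_MILE / 3600.0
    return feet_per_second * frames / fps


if __name__ == "__main__":
    for frames in (1, 2):
        period_ms = 1000.0 * frames / 30.0
        print(f"{frames} frame(s) = {period_ms:.1f} ms -> "
              f"{feet_travelled(70.0, frames):.2f} ft at 70 mph")
```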

I mentioned the audio shortcomings of Ethernet, as this hit home for me during my Tier 1 days. There are a lot of similarities here, and the latencies are needed for real-time operating applications. The only difference is that this system will make decisions that could determine a no/minor injury, severe injury, or loss-of-life scenario. As system architects, we have to ask ourselves: did I give the processor its best chance to process the information in time and allow the system to make the necessary updates, or did I burden the system with unnecessary latency and not give it the best chance?

I appreciate your engagement and will address your other questions in a separate email. Your active participation is crucial for our collective understanding of this complex topic.

Best Regards,

TJ

From: Scott Muma <00003414ca8b162c-dmarc-request@xxxxxxxxxxxxxxxxx>

Sent: Friday, August 9, 2024 4:04 PM

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: [EXTERNAL] Re: [802.3_ISAAC] Need for more Use-Case ad hoc meetings

Hi TJ,

Thanks for the response, it’s good to hear that I am likely misunderstanding the message you intended to convey. However, I likely remain confused, so will explain my interpretation of some of the points and some questions it raised for me. Your continued patience is greatly appreciated.

First in the processor to camera/sensor direction:

Slide 6 “Latency Requirements”

- 10us hard limit for a GPIO trigger or I2C command for functional safety

- 1-2us limit on GPIO trigger events

- SERDES already achieves these latency requirements

Slide 7 “Latency and Jitter Application Diagram”

- Trigger latency of <1-2us ideal

- Schedule events if link cannot achieve <1-2us

- Diagram illustrates rising edge of GPIO at processor to rising edge of GPIO at sensor having delay of 1-2us to achieve GPIO jitter of 1-2us

- Diagram illustrates rising edge of GPIO at processor to rising edge of GPIO at sensor having delay of <10us with PTP to achieve GPIO jitter of 1-2us

Slide 9 “Summary”

- It is proposed to limit the latency to 10us worst case in the switch to camera direction

Questions:

- Slides 6 and 7 seem to be GPIO input to output delay when referring to latency. Is the latency limit proposed on slide 9 also GPIO input-output delay or something else?

- For I2C commands is the 10us hard limit based on the clock stretching for the entire round-trip of the transaction or how would it be detected as a functional safety violation? If a system works a certain way then I can understand why this processor<>sensor round-trip time is critical and performance-limiting, but systems can work other ways to decouple the overall round trip time from the clock stretching to get better performance and compensate for the round-trip-time/network latency.

- Given synchronization methods such as PTP and ability to schedule events like frame synchronization with low skew and low jitter at multiple sensors, what latency could be tolerated? 10us is mentioned, but I am interested in what really drives this limit… if it’s 50us or 100us, but with very low jitter/skew on the frame synch across sensors when does the processor start to be impacted?

- Given a proposed latency limit of 10us in the topology shown on slide 7, is it possible to add an Ethernet switch between the Switch and Bridge without pushing the latency beyond the 10us hard limit? Or should the 10us limit apply up to a certain number of hops, or be understood differently?

Second in the camera/sensor to processor direction:

Slide 6 “Latency Requirements”

- <1.0us latency limit from sensor to switch

- <1.0us latency limit on video channel from sensor to switch

Slide 8 “Latency Requirements”

- PCS to PCS block should not exceed 1us for 10Gbps

- Diagram shows the SOP to SOP delay at the xMII of the bridge/switch should be <1us

Slide 9 “Summary”

- It is proposed to limit the latency to … 1us worst case in the camera to switch direction.

Questions:

- Slide 8 says latency, but describes delay on a very limited part of the link, how does this relate to the <1us latency limit in slide 6?

- How could an Ethernet switch be inserted between the Bridge and Switch without exceeding the 1us latency limit in slide 6? Or is higher latency acceptable in this case?

- If higher latency from sensor to processor is acceptable, then the information on Slide 6 seems out of context. Is it possible to provide this context or to give a clearer requirement on the sensor to processor latency?

I would not yet claim that 10us switch->camera and 1us camera->switch are not the true requirements, but these requirements will severely constrain the valid solutions and I am concerned it will take a networked topology off the table. --

May I ask how you concluded that this is not a true requirement and how this would directly impact network solutions, as this is a different requirement than I have described?

Apologies if the wording was unclear. I was saying that I am not yet convinced one way or the other if these are the requirements needed to meet the overall application requirements. Hopefully my questions above clarify why I’m concerned about the impact on a networked solution. I would be interested to understand how this is different from the requirement that you have described given that Ragnar referred below to these as your “proposed requirements”.

Similarly, if we state that the requirements are precisely the observed behavior of a point-to-point connection, then connecting the camera to processor over a network may not be possible/economical. –-

This sounds like you’re describing two different requirements. The requirements I addressed directly reflect latency when communicating to sensors. The latency you’re describing is when this information wants to be passed into the network, which is a different requirement than I described. I would ask why we could not simply add other latency requirements for other network applications, add what you’re concerned about, and encourage you to share information about these.

Perhaps this is getting to the root of our difference in understanding. I suspect that some of the group (at least me) understands the proposed requirements to be requirements derived from the processor<>camera interaction and overall application requirements independent of the specific network topology/implementation. For example, if we have one requirement that says the system requires GPIO input-output delay must be <10us when point-to-point and another requirement that says it must be <100us over a network, then it means we require a PHY that supports the <10us case. However, it seems unlikely and undesirable that the system would have different requirements based solely on network topology, and so that is why this approach is likely to result in an overconstrained PHY. On the other hand, if we could say that the GPIO input-output delay can be up to 200us, but the skew/jitter at the output across multiple sensors is <1us for all topologies, then we can derive a looser PHY delay requirement and have much greater flexibility in making tradeoffs that can reduce the PHY complexity/cost/power, etc., which I understand to be some of the reasons for 802.3dm and what differentiates it from existing Ethernet PHYs.

Best Regards,

Scott

From: TJ Houck <thouck@xxxxxxxxxxx>

Sent: Thursday, August 8, 2024 7:34 PM

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_ISAAC] Need for more Use-Case ad hoc meetings

Hi Scott,

Thanks for the follow-up. However, I don’t follow how this limits Ethernet functionality, nor did my presentation say this was the only requirement. The applications I brought up were to propose limits on what the SERDES solutions address for their customers today. The presentation aimed to share how automotive ADAS systems are connected to sensors-bridge and Switch-processor. The GPIOs are used as critical trigger events for various applications, and latency is a crucial reason why SERDES solutions are used today since they address these needs desired by customers.

I would not yet claim that 10us switch->camera and 1us camera->switch are not the true requirements, but these requirements will severely constrain the valid solutions and I am concerned it will take a networked topology off the table. --

May I ask how you concluded that this is not a true requirement and how this would directly impact network solutions, as this is a different requirement than I have described?

Similarly, if we state that the requirements are precisely the observed behavior of a point-to-point connection, then connecting the camera to processor over a network may not be possible/economical. –-

This sounds like you’re describing two different requirements. The requirements I addressed directly reflect latency when communicating to sensors. The latency you’re describing is when this information wants to be passed into the network, which is a different requirement than I described. I would ask why we could not simply add other latency requirements for other network applications, add what you’re concerned about, and encourage you to share information about these.

I believe Kirsten tried to make this point in the call, --

I must’ve missed when this was brought up.

Best Regards,

TJ

From: Scott Muma <00003414ca8b162c-dmarc-request@xxxxxxxxxxxxxxxxx>

Sent: Thursday, August 8, 2024 2:35 PM

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: [EXTERNAL] Re: [802.3_ISAAC] Need for more Use-Case ad hoc meetings

Hi Ragnar, Max,

I would also like to have more use case discussions, and appreciate your contributions so far. However, it would be useful to separate the behavior of specific implementations from the system/application requirements.

TJ’s presentation made latency/delay understandable through diagrams; however, I understood the presentation was describing the behavior of a specific implementation.

To take this to an extreme, if we hypothetically connect a processor directly to an imager we could observe the behavior of that implementation and it might “require” even lower latency because of decisions made by the implementer even if the overall application has no direct requirement for such low latency. If we accept such requirements then there is no possible alternative but direct connection between camera and ECU.

Similarly, if we state that the requirements are precisely the observed behavior of a point-to-point connection, then connecting the camera to processor over a network may not be possible/economical. I believe Kirsten tried to make this point in the call, and if there is no network possible then Ethernet may be burdening the solution to the point that it can’t even achieve the point-to-point case at similar cost/power/latency.

So to Max’s point on the call, I don’t expect anyone is against a solution that supports a networked topology (since that is the point of Ethernet), but overconstraining the valid solutions will prevent a networked topology.

I would not yet claim that 10us switch->camera and 1us camera->switch are not the true requirements, but these requirements will severely constrain the valid solutions and I am concerned it will take a networked topology off the table.

Best Regards,

Scott

From: Ragnar Jonsson <rjonsson@xxxxxxxxxxx>

Sent: Thursday, August 8, 2024 8:57 AM

To: STDS-802-3-ISAAC@xxxxxxxxxxxxxxxxx

Subject: [802.3_ISAAC] Need for more Use-Case ad hoc meetings

Hi Max and all,

At the end of yesterday’s meeting Max asked if we should have more Use-Case ad hoc meetings before the September meeting. There was a problem with my microphone, so you probably did not hear my comment. I think that we obviously need to have more Use-Case ad hoc meetings before the September meeting.

While yesterday’s ad hoc was a good start, we did not even have time to finish going over your proposed definitions of delay vs latency. Kirsten has already sent a follow-up email, highlighting the need for finishing that discussion.

I think that we need a deeper dive on the latency/delay requirements. There was a factor of 10 difference in the two proposed latency requirements presented in Montreal:

Kirsten presented

https://www.ieee802.org/3/dm/public/0724/matheus_dm_02b_latency_07152024.pdf

On slide 3 it states “It provides concrete examples of latency and latency requirements in a camera system.”

On slide 9 it states “Ethernet latencies of 10us in the DS and of 100us in the US are sufficiently small …”

TJ presented

https://www.ieee802.org/3/dm/public/0724/houck_fuller_3dm_01_0724.pdf

On slide 9 it states “It is proposed to limit the latency to 10us worst case in the switch to camera direction and 1us worst case in the camera to switch direction.”

TJ told us that these requirements are based on our conversations with multiple OEMs and with the ADAS SoC vendors.

There are also other issues that were brought up in Montreal related to Use-Cases that need further discussion.

In summary, we clearly need more Use-Case ad hoc meetings.

Ragnar

To unsubscribe from the STDS-802-3-ISAAC list, click the following link:

https://listserv.ieee.org/cgi-bin/wa?SUBED1=STDS-802-3-ISAAC&A=1
