Steve,

That is entirely possible.

☺

That said, if the specification says you must report a FAIL if the power level is below a certain threshold, doesn’t that limit your freedom just a bit? Doesn’t it require you to indicate it’s not a valid signal? If not – if it’s wide open to ignore that – then why do we even include it?

☺

Assuming it does matter, the chain of logic you just constructed would seem to suggest we should be selecting a power level threshold here that is lower than the lowest value we might expect to be successful, and relying on the various other checks that occur in a device to weed out something that’s just noise (instead of using the power level to do so). Which is what I’m advocating for.

☺

Thanks.

Matt

From: "Trowbridge, Steve (Nokia - US)" <steve.trowbridge@xxxxxxxxx>

Date: Monday, July 6, 2020 at 4:04 PM

To: Matt Schmitt <m.schmitt@xxxxxxxxxxxxx>, "STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx" <STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx>

Subject: RE: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

Hi Matt,

I feel like you’re taking this all too seriously.

😊

First of all, the standard is written as a kind of model intended to illustrate the behavior expected to be exhibited by an implementation. The standard is not written in a way to actually describe an implementation.

The model is decomposed across a set of sublayers that are traditionally used in Ethernet standards, but except where there are physically instantiated interfaces identified, those sublayers do not need to correspond to components in an implementation.

Any real implementation of a 100GBASE-ZR PHY is going to include a coherent DSP. That DSP, in the Rx direction, will take as its input the output of a pair of ADCs, and will produce as its output a CAUI-4 or a 100GAUI-n. So buried inside of that DSP is part of the clause 154 PMD sublayer, the entirety of the clause 153.3 100GBASE-ZR PMA sublayer, the entirety of the clause 153.2 SC-FEC sublayer, and, in the case of CAUI-4 output, a clause 83 PMA, and in the case of a 100GAUI-n output, a clause 152 inverse RS-FEC sublayer followed by a clause 135 PMA to wind down to the right lane count.
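Written out as data, the two Rx-direction stacks described above look like this. It is only an illustrative sketch: the sublayer names are transcribed from the paragraph, while the dictionary and its key names are assumptions of this example, not anything defined in the standard.

```python
from typing import Dict, List

# Rx-direction sublayers buried inside a coherent DSP, listed bottom (line side)
# to top (host side), keyed by the host interface the DSP produces.
# Illustrative layout only; names transcribed from the message above.
DSP_RX_SUBLAYERS: Dict[str, List[str]] = {
    "CAUI-4": [
        "clause 154 PMD (part)",
        "clause 153.3 100GBASE-ZR PMA",
        "clause 153.2 SC-FEC",
        "clause 83 PMA",
    ],
    "100GAUI-n": [
        "clause 154 PMD (part)",
        "clause 153.3 100GBASE-ZR PMA",
        "clause 153.2 SC-FEC",
        "clause 152 inverse RS-FEC",
        "clause 135 PMA",  # winds down to the right lane count
    ],
}

for host_interface, stack in DSP_RX_SUBLAYERS.items():
    print(f"{host_interface}: " + " -> ".join(stack))
```

Both stacks share the same bottom three sublayers; only the host-side portion differs.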

So coming out of the “top” of your coherent DSP, you will have some kind of PMA:IS_SIGNAL.indication which, based on the cascade of different sublayer checks described in the model, should have your real implementation saying “FAIL” when any of the elements the standard described in one of its hypothetical sublayers would have figured out that there was something wrong with the signal that should cause it to report “FAIL” to the sublayer above.

Does your coherent DSP actually do the checks in the manner and in the order described in the standard? Don’t know, don’t care, as long as in aggregate, it reports “FAIL” whenever the model would have reported “FAIL”. And since there is no physically instantiated interface at the “stream of DQPSK symbols” point between clause 154 and clause 153.3, there isn’t any exposed point where you could even tell what the value of the PMD:IS_SIGNAL.indication primitive was.

The first time you could possibly see an IS_SIGNAL.indication primitive value would be several sublayers higher.

But even if you could decompose an implementation exactly according to the way the sublayers are described in the standard, you do have to view the whole thing as a cascade of checks. Each sublayer is giving an indication to the sublayer above: “as far as I can tell, the signal I am providing to you is good”.

There are certain things that, because of the nature of what a sublayer does, are going to get checked. The PMD sublayer will not only conclude that it is getting enough light to receive a signal, but also that it can tease out something from that light that looks enough like a pair of DQPSK symbol streams that it can recover them and pass them to the sublayer above. That set of DQPSK symbols may or may not represent a valid Ethernet signal. The PMD isn’t required to check, for example, that the bits encoded in the DQPSK symbols can be deinterleaved into 20 lanes where you’ll find proper FAS on each lane. Even if you do find FAS on each lane, there is no guarantee that it reassembles into something that looks like an OTU4 frame with GMP overhead, and even if it does, and you can pull a stream of 66B blocks out of it and round-robin distribute them, there is no guarantee that those have PCS lane markers on them, which you may not discover until you get to the PCS. At each stage, you can imagine a sort of misbehaved signal that would pass the checks described for the lower sublayers but would fail the checks at the sublayers above. It’s not clear that you would ever encounter any of these misbehaved signal formats in real life, but they could be constructed.
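The cascade can be sketched as a chain of independent checks, where the aggregate reports FAIL as soon as any stage objects. This is a minimal sketch under stated assumptions: the received signal is reduced to a hypothetical dictionary of flags, the stage names paraphrase the checks in the paragraph above, and the assignment of checks to stages is illustrative rather than the standard's exact decomposition.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical model: the received signal is a dict of flags, and each stage
# checks one property. Which sublayer owns which check is an assumption.
Check = Callable[[Dict[str, bool]], bool]

STAGES: List[Tuple[str, Check]] = [
    ("DQPSK streams recovered", lambda s: s["dqpsk_recovered"]),
    ("FAS found on all 20 lanes", lambda s: s["fas_on_all_lanes"]),
    ("OTU4 frame with GMP overhead", lambda s: s["otu4_with_gmp"]),
    ("PCS lane markers present", lambda s: s["pcs_lane_markers"]),
]


def is_signal(signal: Dict[str, bool]) -> Tuple[str, Optional[str]]:
    """Walk the stages bottom-up; the aggregate reports FAIL if any stage fails."""
    for name, check in STAGES:
        if not check(signal):
            return "FAIL", name  # first stage that notices the problem
    return "OK", None


# A "misbehaved" signal that passes every lower check but has no PCS lane markers.
misbehaved = {
    "dqpsk_recovered": True,
    "fas_on_all_lanes": True,
    "otu4_with_gmp": True,
    "pcs_lane_markers": False,
}
print(is_signal(misbehaved))  # ('FAIL', 'PCS lane markers present')
```

The misbehaved signal is only rejected at the last stage; an implementation is free to order or merge these checks differently as long as the aggregate result matches.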

Beyond the stuff that inherently must be checked by a sublayer for the sublayer to do its job, the standard tries not to be overly prescriptive about what a sublayer is or is not allowed to check. Do whatever works for your implementation, with the only real bar being that you never report “FAIL” if you are actually receiving a valid Ethernet signal.

Regards,

Steve

From: Matthew Schmitt <m.schmitt@xxxxxxxxxxxxx>

Sent: Monday, July 6, 2020 2:57 PM

To: STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

Leon,

Thanks for the additional follow up. I spent some more time with the various relevant portions of the spec, and am starting to understand it a bit better; thanks for bearing with me.

Based on that, it seems like even if SIGNAL_DETECT is set to OK based on the presence of noise energy, at the PMA and/or FEC layers the device/system will detect that it’s not a valid signal and stop there.

Whereas if SIGNAL_DETECT is set to FAIL, even if there’s actually a valid signal, the system won’t work. That would seem to suggest we want to bias things toward a lower threshold for SIGNAL_DETECT, so that we allow valid low-power signals to work, and just rely on higher layers to deal with a false OK due to a high noise floor.
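The asymmetry in this argument can be tabulated with a small function. It is a sketch that bakes in the key assumption of the reasoning above, namely that the PMA/FEC checks always reject an invalid signal that slipped past SIGNAL_DETECT; `link_outcome` and its return strings are hypothetical names chosen for this example.

```python
def link_outcome(valid_signal: bool, signal_detect_ok: bool) -> str:
    """Outcome assuming PMA/FEC checks always reject an invalid signal."""
    if not signal_detect_ok:
        # FAIL propagates upward and takes the link down regardless of the signal.
        return "down: valid signal lost" if valid_signal else "down: correct"
    # SIGNAL_DETECT = OK: the higher-layer checks decide.
    return "up" if valid_signal else "down: caught by PMA/FEC"


for valid in (True, False):
    for detect_ok in (True, False):
        detect = "OK" if detect_ok else "FAIL"
        print(f"valid={valid}, SIGNAL_DETECT={detect}: {link_outcome(valid, detect_ok)}")
```

Under that assumption, the only combination that loses a valid signal is SIGNAL_DETECT = FAIL with a valid signal present.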

Thoughts? Am I still missing something?

Thanks.

Matt

From: Leon Bruckman <leon.bruckman@xxxxxxxxxx>

Date: Saturday, July 4, 2020 at 10:25 PM

To: Matt Schmitt <m.schmitt@xxxxxxxxxxxxx>, "STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx" <STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx>

Subject: RE: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

Hi Matt,

According to D2.0 clause 153.2.1, SIGNAL_OK is conditioned on its way to the MAC by fec_align_status: “The SIGNAL_OK parameter of the FEC:IS_SIGNAL.indication primitive can take one of two values: OK or FAIL. The value is set to OK when the FEC receive function has identified codeword boundaries as indicated by fec_align_status equal to true. That value is set to FAIL when the FEC receive function is unable to reliably establish codeword boundaries as indicated by fec_align_status equal to false. When SIGNAL_OK is FAIL, the rx_bit parameters of the FEC:IS_UNITDATA_i.indication primitives are undefined.”

Something similar is also found in the text of clause 152.2.
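A minimal sketch of the quoted rule, as a consumer above the FEC would see it. The function name is hypothetical, and representing an undefined rx_bit as `None` is a modelling choice of this example, not something the draft specifies.

```python
from typing import Optional, Sequence, Tuple


def fec_is_signal(fec_align_status: bool,
                  rx_bits: Sequence[int]) -> Tuple[str, Optional[Sequence[int]]]:
    """Map fec_align_status to SIGNAL_OK following the quoted D2.0 text.

    rx_bit is undefined when SIGNAL_OK is FAIL; this sketch returns None in
    that case so a consumer cannot accidentally use the bits.
    """
    if fec_align_status:
        return "OK", rx_bits   # codeword boundaries identified
    return "FAIL", None        # boundaries not reliably established


print(fec_is_signal(True, [1, 0, 1]))   # ('OK', [1, 0, 1])
print(fec_is_signal(False, [1, 0, 1]))  # ('FAIL', None)
```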

Also, trowbridge_3ct_01_200528.pdf showed the probability of false synchronization to be insignificant.

Hope this helps,

Leon

From: Matthew Schmitt [mailto:m.schmitt@xxxxxxxxxxxxx]

Sent: Thursday, 02 July 2020 23:55

To: STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

In continuing to think about this, I realized I should also have asked about the implications of the converse here.

Pete’s explanation of what happens when SIGNAL_DETECT is set to FAIL was helpful, but what if SIGNAL_DETECT is set to OK when in fact there isn’t a good signal? What are the implications there?

My assumption is that while the device might set that primitive to OK – meaning it thinks there’s a signal there – at some other point in the process of receiving the signal there will be another indicator that in fact there isn’t a valid signal present, and that will cut things off. If not, then I definitely see where that could be a major issue.

Thanks.

Matt

From: Matt Schmitt <m.schmitt@xxxxxxxxxxxxx>

Reply-To: Matt Schmitt <m.schmitt@xxxxxxxxxxxxx>

Date: Tuesday, June 30, 2020 at 12:18 PM

To: "STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx" <STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx>

Subject: Re: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

Leon and Pete,

Thanks much for the clarification – that’s more or less what I suspected, but I wanted to confirm that I wasn’t coming to an incorrect conclusion.

In that case, it seems like we would want to bias things to not set SIGNAL_DETECT to FAIL if there is, in fact, a valid signal. In looking at the proposed response to the various comments that touch on this topic – which seems to go in the other direction, but with a TBD note about this case – I will be very interested in seeing how such a proposal would address this issue, especially since sensitivity down to -30 dBm is critical for the MSO access network business case, which is a key part of the broad market potential.

Thanks again.

Matt

From: "pete@xxxxxxxxxxxxxxx" <pete@xxxxxxxxxxxxxxx>

Date: Tuesday, June 30, 2020 at 1:22 AM

To: Matt Schmitt <m.schmitt@xxxxxxxxxxxxx>, "STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx" <STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx>

Cc: 'Leon Bruckman' <leon.bruckman@xxxxxxxxxx>

Subject: RE: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL


Matt,

The value of the SIGNAL_DETECT variable is passed upwards in the stack via the PMD:IS_SIGNAL.indication primitive. See 154.2.

This signal is passed through successive layers above this as shown in Figure 80-4a.

The PMA sublayer combines the signal passed to it by the PMD sublayer with its own logic (SIL) as described in Figure 83-5 and the text below it. The combined signal is passed to the SC-FEC sublayer via the PMA:IS_SIGNAL.indication primitive.

The SC-FEC sublayer uses this signal to set the variable signal_ok (see 153.2.4.1.1):

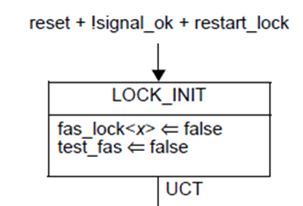

This variable is used in Figure 153-7 (SC-FEC synchronization state diagram) where it is one of the conditions causing the synchronization process to restart.

As a consequence of the above, if the PMD sublayer sets SIGNAL_DETECT to FAIL, the link is brought down.
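The chain described above can be sketched as follows. Everything here is a simplification under loudly stated assumptions: the PMA's combination of the PMD indication with its own logic is modelled as a logical AND, and `ScFecSync` is a hypothetical two-field stand-in for the Figure 153-7 state diagram that keeps only the behaviour described above (signal_ok being false restarts synchronization).

```python
from dataclasses import dataclass


def pma_signal_ok(pmd_signal_detect_ok: bool, pma_logic_ok: bool) -> bool:
    # Assumption: the PMA combines the PMD indication with its own logic as an AND.
    return pmd_signal_detect_ok and pma_logic_ok


@dataclass
class ScFecSync:
    """Hypothetical, heavily simplified stand-in for the Figure 153-7 state diagram."""
    locked: bool = False
    restarts: int = 0  # number of steps on which signal_ok forced a restart

    def step(self, signal_ok: bool, boundaries_found: bool) -> None:
        if not signal_ok:
            # signal_ok false restarts synchronization, dropping any existing lock.
            self.locked = False
            self.restarts += 1
        elif boundaries_found:
            self.locked = True


sync = ScFecSync()
sync.step(pma_signal_ok(True, True), boundaries_found=True)   # locks
sync.step(pma_signal_ok(False, True), boundaries_found=True)  # PMD reports FAIL
print(sync.locked, sync.restarts)  # False 1
```

Note that the second step drops the lock even though codeword boundaries are still "found": a FAIL from the PMD overrides what the FEC itself sees.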

Regards,

Pete Anslow (pete@xxxxxxxxxxxxxxx)

From: Leon Bruckman <leon.bruckman@xxxxxxxxxx>

Sent: 30 June 2020 07:03

To: STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx

Subject: Re: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

Hi Matt,

I think you are right. According to 802.3 clause 91.2: When SIGNAL_OK is FAIL, the rx_bit parameters of the FEC:IS_UNITDATA_i.indication primitives are undefined.

This implies that if this signal is FAIL, the data is not reliable. According to Table 154-5, there are very specific conditions for SIGNAL_OK to be set to OK or FAIL, while for other conditions (last row in the table) it is unspecified, so in my opinion it may be set to FAIL or OK, but you cannot rely on it.
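The three regions described for Table 154-5 (OK, FAIL, and unspecified) can be sketched as below. For illustration only, this assumes the conditions reduce to two received-power thresholds passed in as parameters; the actual conditions in Table 154-5 are not reproduced here, and the example threshold values are hypothetical.

```python
from typing import Optional


def signal_detect(rx_power_dbm: float,
                  fail_below_dbm: float,
                  ok_at_or_above_dbm: float) -> Optional[str]:
    """Return "OK", "FAIL", or None for the unspecified region between thresholds.

    Thresholds are parameters; the real conditions live in Table 154-5 and
    are not reproduced here.
    """
    if fail_below_dbm > ok_at_or_above_dbm:
        raise ValueError("FAIL threshold must not exceed the OK threshold")
    if rx_power_dbm >= ok_at_or_above_dbm:
        return "OK"
    if rx_power_dbm < fail_below_dbm:
        return "FAIL"
    return None  # unspecified: an implementation may report either value


# Hypothetical thresholds, chosen only for illustration.
for p in (-28.0, -32.0, -40.0):
    print(p, signal_detect(p, fail_below_dbm=-35.0, ok_at_or_above_dbm=-30.0))
```

Whatever an implementation does in the `None` region, a receiver should not treat that value as meaningful.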

To summarize: For the data to flow normally SIGNAL_OK must be set to OK. Note that this was the reason for changing the 802.3ct framing scheme (avoid frequent frame losses). You can see the details in trowbridge_3ct_01_200528.pdf.

Best regards,

Leon

From: Matthew Schmitt [mailto:m.schmitt@xxxxxxxxxxxxx]

Sent: Monday, 29 June 2020 21:18

To: STDS-802-3-DWDM@xxxxxxxxxxxxxxxxx

Subject: [802.3_DWDM] Implications of SIGNAL_DETECT = FAIL

One of the key issues I believe that we as a group are going to need to resolve to move 802.3ct forward is the definition of when to set SIGNAL_DETECT to FAIL.

As a part of that discussion, it would help me to better understand what happens when SIGNAL_DETECT is set to FAIL. My assumption is that a device would use that to determine that there was not a valid signal and therefore could not operate. However, I’m also aware enough to know that I’m making an assumption which may or may not be correct. Therefore, it would help me greatly if some of those that know 802.3 better than I could help me understand the implications to the system for setting SIGNAL_DETECT to FAIL, particularly when there is in fact a valid signal present.

Thanks for any help.

Matt

To unsubscribe from the STDS-802-3-DWDM list, click the following link:

https://listserv.ieee.org/cgi-bin/wa?SUBED1=STDS-802-3-DWDM&A=1
